Chat with Multiple PDFs Using Langchain and Gemini Pro

Embarking on the journey to harness the power of AI for interacting with multiple PDFs, Langchain and Gemini Pro emerge as groundbreaking tools that redefine our approach to document management and information retrieval. In an age where data is as vast as it is varied, the ability to seamlessly converse with a multitude of PDF documents simultaneously not only enhances productivity but also unlocks new avenues for knowledge discovery. This blog post delves into the innovative integration of Langchain with Gemini Pro, offering a comprehensive guide on how you can leverage these advanced technologies to chat with multiple PDFs efficiently.

We'll explore the core features of Langchain and Gemini Pro, highlighting their unique capabilities to process, understand, and respond to the content within PDF documents. Whether you're a researcher sifting through academic papers, a professional navigating extensive reports, or simply someone looking to extract information from various PDFs without manually flipping through each page, this guide will illuminate the path to achieving streamlined communication with your documents.

.env File

First step we will need to acquire an API key from Google AI and store the API key in a .env file.

GOOGLE_API_KEY='[Your API Key]'requirements.txt File

Create a requirements.txt file and with the information below, which is a list of Python packages that are necessary for the project to run.

streamlit

google-generativeai

python-dotenv

langchain

PyPDF2

faiss-cpu

langchain_google_genaistreamlit: Streamlit is an open-source Python library that makes it easy to create and share beautiful, custom web apps for machine learning and data science. In just a few minutes you can build and deploy powerful data apps, making it a popular choice for rapid prototyping and presentation of data-driven insights.

google-generativeai: Google's tools or APIs for generative AI models. Google offers several AI and machine learning services that include generative AI capabilities, such as text generation, image generation, and more, through its cloud platform. These tools allow developers to integrate advanced AI features into their applications.

python-dotenv:

python-dotenvis a Python library that reads key-value pairs from a.envfile and adds them to the environment variable. It is commonly used to manage application configurations or sensitive information (like API keys) outside of the main codebase, enhancing security and flexibility in development and deployment environments.langchain: Langchain is a library designed to facilitate the creation of applications that leverage language models. It provides tools and abstractions to easily integrate language AI into products, making it simpler to build applications that require natural language understanding and generation.

PyPDF2: PyPDF2 is a Python library for reading and writing PDF files. It allows you to split, merge, crop, and transform the pages of PDF files, as well as extract text and metadata from PDFs. It's a useful tool for any project that needs to programmatically interact with PDF documents.

faiss-cpu: FAISS (Facebook AI Similarity Search) is a library developed by Facebook's AI Research team for efficient similarity search and clustering of dense vectors. The

faiss-cpuversion is specifically compiled for use on systems without GPU acceleration. It's widely used in deep learning applications where fast nearest neighbor search in large vector spaces is required.langchain_google_genai: Langchain with Google's Generative AI services.

app.py File

Now that we’ve set up our environment we can now create our app.py file. First we need to import necessary packages.

from langchain_google_genai import GoogleGenerativeAIEmbeddings

import google.generativeai as genai

from langchain.vectorstores import FAISS

from langchain_google_genai import ChatGoogleGenerativeAI

from langchain.chains.question_answering import load_qa_chain

from langchain.prompts import PromptTemplate

from dotenv import load_dotenvNext we need to load our Google API keys from our .env file.

load_dotenv()

os.getenv("GOOGLE_API_KEY")

genai.configure(api_key=os.getenv("GOOGLE_API_KEY"))We need to create a function that takes a list of PDF documents as input. It initializes an empty string `text` and iterates over each PDF document. For each document, it creates a `PdfReader` object to read the pages. It then iterates over each page in the PDF and extracts the text, appending it to the `text` string. Finally, it returns the concatenated text from all PDFs.

def get_pdf_text(pdf_docs):

text=""

for pdf in pdf_docs:

pdf_reader= PdfReader(pdf)

for page in pdf_reader.pages:

text+= page.extract_text()

return textNext we need to create a function to split a large text into manageable chunks. It uses a hypothetical `RecursiveCharacterTextSplitter` to divide the text into chunks of a specified size (`chunk_size=10000` characters) with a certain overlap between chunks (`chunk_overlap=1000` characters). This is useful for processing large texts in systems with limited memory or for feeding the text into models that have a maximum input size. The function returns a list of text chunks.

def get_text_chunks(text):

text_splitter = RecursiveCharacterTextSplitter(chunk_size=10000, chunk_overlap=1000)

chunks = text_splitter.split_text(text)

return chunksThe ‘get_vector_store’ function converts the text chunks into vector embeddings using a hypothetical `GoogleGenerativeAIEmbeddings` model. These embeddings are then stored in a FAISS index for efficient similarity search. The `FAISS.from_texts` method likely converts the chunks of text into vectors and creates the index. The index is saved locally with the filename `faiss_index`. This setup is useful for quickly finding the most relevant text chunks in response to queries.

def get_vector_store(text_chunks):

embeddings = GoogleGenerativeAIEmbeddings(model = "models/embedding-001")

vector_store = FAISS.from_texts(text_chunks, embedding=embeddings)

vector_store.save_local("faiss_index")The ‘get_converstational_chain‘ function sets up a conversational model chain using LangChain and a Google Generative AI model named "gemini-pro". It defines a prompt template for the model, instructing it to answer questions based on the provided context. The function returns a conversational chain that can be used to generate responses to user queries.

def get_conversational_chain():

prompt_template = """

Answer the question as detailed as possible from the provided context, make sure to provide all the details, if the answer is not in

provided context just say, "answer is not available in the context", don't provide the wrong answer\n\n

Context:\n {context}?\n

Question: \n{question}\n

Answer:

"""

model = ChatGoogleGenerativeAI(model="gemini-pro",

temperature=0.3)

prompt = PromptTemplate(template = prompt_template, input_variables = ["context", "question"])

chain = load_qa_chain(model, chain_type="stuff", prompt=prompt)

return chainThe ‘user_input‘ function handles user queries. It loads the pre-saved FAISS index and uses it to find the text chunks most similar to the user's question. It then gets a conversational chain and uses it to generate a response to the question based on the relevant text chunks. The response is printed and also displayed on the Streamlit application interface.

def user_input(user_question):

embeddings = GoogleGenerativeAIEmbeddings(model = "models/embedding-001")

new_db = FAISS.load_local("faiss_index", embeddings)

docs = new_db.similarity_search(user_question)

chain = get_conversational_chain()

response = chain(

{"input_documents":docs, "question": user_question}

, return_only_outputs=True)

print(response)

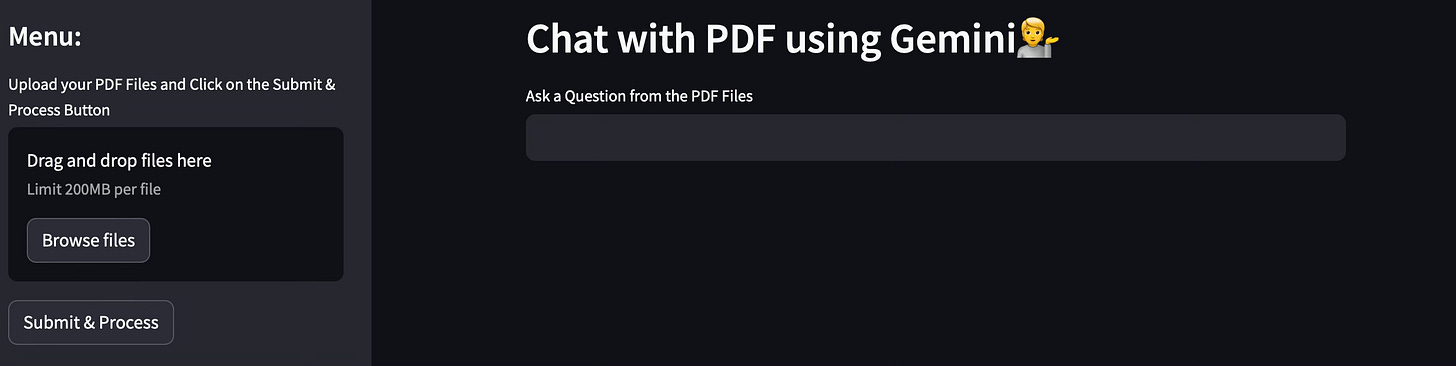

st.write("Reply: ", response["output_text"])This last function sets up the Streamlit application. It configures the page, sets up a header, and creates an input field for user questions. If a question is asked, it calls `user_input` to handle the question. Additionally, it provides a sidebar for users to upload PDF files and process them. Upon clicking the "Submit & Process" button, it reads the uploaded PDFs, extracts text, splits the text into chunks, and stores the chunks in a FAISS index for later querying.

def main():

st.set_page_config("Chat PDF")

st.header("Chat with PDF using Gemini💁")

user_question = st.text_input("Ask a Question from the PDF Files")

if user_question:

user_input(user_question)

with st.sidebar:

st.title("Menu:")

pdf_docs = st.file_uploader("Upload your PDF Files and Click on the Submit & Process Button", accept_multiple_files=True)

if st.button("Submit & Process"):

with st.spinner("Processing..."):

raw_text = get_pdf_text(pdf_docs)

text_chunks = get_text_chunks(raw_text)

get_vector_store(text_chunks)

st.success("Done")

if __name__ == "__main__":

main()Execution Flow

1. The user uploads PDF files and clicks "Submit & Process".

2. The application extracts text from the PDFs, splits the text into chunks, and stores vector representations of these chunks in a FAISS index.

3. The user enters a question.

4. The application uses the FAISS index to find relevant text chunks, generates a response using the conversational AI model, and displays the response.

In conclusion, leveraging Langchain to chat with multiple PDFs offers a transformative approach to handling and extracting information from extensive text-based resources. This innovative method simplifies the process of querying vast amounts of data contained within PDFs, making it accessible and interactive. By converting static documents into a dynamic conversation, users can effortlessly navigate through complex information, obtain precise answers, and gain insights that would otherwise require tedious manual search and analysis. Whether for academic research, business intelligence, or personal knowledge management, Langchain's capabilities empower users to unlock the full potential of their PDF archives in a conversational, efficient, and user-friendly manner. As we continue to explore the boundaries of what's possible with AI and document interaction, Langchain stands out as a powerful tool that redefines our engagement with digital documents, setting a new standard for information retrieval and utilization in the digital age.

If you have any questions or would like to learn more about the topics discussed in this post, please don't hesitate to reach out to me. I'm always happy to help and provide guidance. Feel free to drop me a line in the comments.